Intent Classification Using LLM Source Files

- conversations_source1.xlsx

- intentClassificationUsingLLM.py

- intent_classification_using_LLM_performance.ipynb

- intent_classification_using_LLM_performance.html

- Download the source files using this link

The Promise of LLMs in Intent Classification

LLMs hold the promise of streamlining intent classification without the exhaustive need for labeled datasets, thus overcoming a significant hurdle faced by traditional NLU systems. The application of LLMs in this domain is not just about leveraging their ability to generate content but to utilize their deep linguistic and contextual understanding to accurately classify user intents.

The exploration into LLMs for intent classification introduces several cutting-edge methodologies, such as zero-shot learning, one-shot, and few-shot learning, as well as retrieval-augmented generation (RAG). These approaches offer varying degrees of flexibility and adaptation to new or evolving user queries without the need for extensive prior examples.

Particularly intriguing is the concept of zero-shot learning, where the model classifies intents without any prior specific examples. This approach has the potential to not only optimize the classification process but also enhance the virtual assistant’s capability to address novel queries, thereby elevating the overall user experience.

Integrating GPT-4 and Conversation History

Incorporating detailed roles and the conversation history into the framework for using LLMs like GPT-4 can significantly enhance the precision and relevance of intent classification. By providing clear definitions and context, these models can better navigate the nuances of conversation, leading to more accurate intent recognition—a key component in sophisticated conversational AI systems.

This exploration into the use of LLMs for intent classification is not just an academic exercise but a practical investigation into how we can leverage cutting-edge AI to deliver more responsive, efficient, and accurate customer interactions. As we delve deeper into the capabilities of LLMs, the goal is to uncover methodologies that can transform the landscape of customer service, making digital interactions more intuitive and satisfying for users everywhere.

Implementation – A Structured Approach

System Role Definition

Setting up the “System” role is pivotal, as it clearly defines the LLM’s primary function as an “Intent Classifier for Virtual Assistant Conversations.” This involves providing concise descriptions of the virtual assistant’s role, its representative entity, its tasks, and a list of potential intents to select from. These descriptions are instrumental in enabling the LLM to grasp the subtleties of different intents using its extensive pre-training, without relying on explicit examples.

Assistant and User Role Integration

The “Assistant” role contextualizes the conversation, presenting the virtual assistant’s contributions to the conversation, which is crucial for the LLM to follow the conversation’s progression.

The “User” role, centered on the customer’s latest respond (input), is key to the intent classification process. Positioning this input within the broader conversation context, as defined by the Assistant and System roles, allows the LLM to more accurately deduce the user’s intent.

Messages

The input to the model is structured as an array of message objects, each with a specified role and content. These roles include the system (defining the task and intent descriptions), the assistant (providing the last response from the virtual assistant), and the user (supplying the user’s latest response to be classified).

messages = [

# Example of system role

{ "role": "system",

"content": "Role: Intent Classifier for Virtual Assistant Interactions.

Task: Identify and classify user intents from their inputs with a virtual assistant, aligning each with a specific intent from the list provided.

Context: This dialogue involves a virtual assistant and a customer discussing appointments, encompassing requests for scheduling, cancelling, rescheduling, obtaining details about the appointment, and other as listed below.

Predefined Intents:

cancel appointment: User wants to cancel the appointment.

reschedule appointment: User intends to change / reschedule the appointment.

...

out of scope: Applies when the user's request doesn't fit any listed intents or falls beyond the assistant's scope, or the intent is ambiguous.

Your objective is to accurately map each user responses to the relevant intent.

Output Example: {"intent": "cancel appointment"} "},

# Example of assistant response

{ "role": "assistant", "content": "Your appointment is scheduled for Monday 2/26/24 at 8AM. Let us know if you want to confirm, cancel or reschedule?"},

# Example of user response

{ "role": "user", "content": "Can we do it later that week? I have another engagement at that time."}

]

Calling OpenAI GPT-4 Turbo model with Messages

The method below showcases the integration of an advanced AI model like GPT-4 into an intent classification framework with minimal reliance on pre-labeled data. Leveraging the model’s inherent knowledge and contextual comprehension enables the prediction of intents in a highly efficient manner, streamlining the preparation and maintenance involved in conversational AI systems.

# Call the OpenAI model with the messages.

completion = openai.ChatCompletion.create(

model='gpt-4-1106-preview',

max_tokens=2000,

messages=messages,

temperature=1,

top_p=1,

frequency_penalty=0.5,

presence_penalty=0

)

Extract and Process the Response

Once the model generates a completion, the intent is extracted from the content of the response. It’s assumed that the model’s output is in JSON format, containing an “intent” field. The response is parsed, and the intent is retrieved and converted to lowercase for consistent handling.

# Process the OpenAI response to extract the classified intent.

response = completion['choices'][0]['message']['content']

data = json.loads(response)

openai_intent = data['intent']

# Output the classified intent.

print(f"The classified intent is: {openai_intent}")

Initial Testing of LLMs for Intent Classification

In the preliminary evaluation conducted, the OpenAI GPT-4 Turbo LLM was employed to classify intents within the context of conversational AI, with a focus on a dataset that comprised 500 interactions between customers and a virtual assistant tasked with appointment management. The dataset was organized to include responses from the assistant, queries from users, and the correct intent labels across 20 distinct intent categories.

Each user response was processed through the LLM, and the intents predicted by the model were then compared with the actual labeled intents to evaluate the model’s accuracy. The counts of input and output tokens for each interaction were also monitored as part of the test

Main Observations

The average input prompt comprised approximately 1000 tokens, with the model’s responses averaging 10 tokens, formatted as JSON (e.g., {“intent”: “specific intent”}).

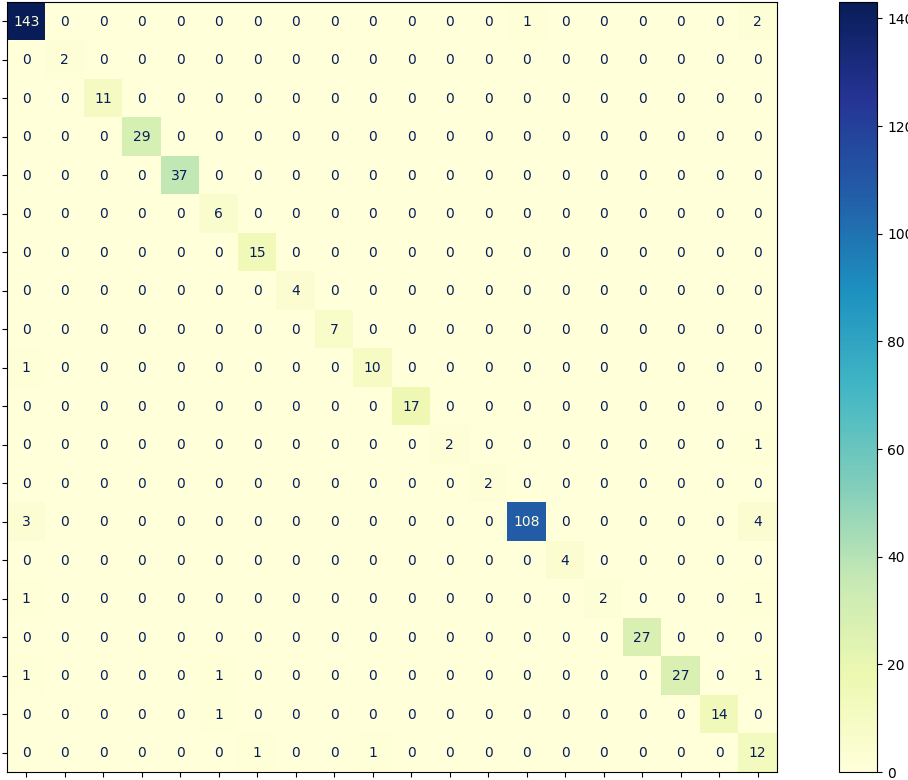

The confusion matrix of actual vs predicted label is presented below.

Performance Metrics

The “model’s” performance metrics indicate a high level of effectiveness in intent classification, reflecting its ability to understand and categorize user intents accurately:

Accuracy (0.96): This metric indicates that 96% of the predictions were correct. High accuracy suggests that the model is highly reliable in classifying intents correctly across a broad range of user inputs.

Recall (0.93): With a recall rate of 93%, it is adept at identifying relevant instances across all categories. This means it successfully captured a large proportion of relevant intents, reducing the likelihood of missed or unrecognized intents.

Precision (0.96): Precision at 96% signifies that when it predicts a specific intent, it is correct 96% of the time. This high precision rate is crucial in scenarios where the cost of false positives is high, ensuring that responses generated or actions taken based on the classified intent are appropriate and accurate.

F1 Score (0.94): The F1 Score combines precision and recall into a single metric, offering a balanced view of the overall performance. An F1 Score of 0.94 is excellent and indicates a strong balance between precision and recall, suggesting the model is both accurate and consistent in its intent classification.

Overall, these metrics suggest that the LLM is highly effective at classifying intents, making it a robust solution for conversational AI applications where understanding user intent is critical.

Cost Consideration

While the LLM performance in intent classification is impressively high, it’s important to also consider the cost implications, especially in scenarios involving multiple interactions within a single conversation. The cost for classifying a single intent has been calculated at $0.0103, derived from OpenAI’s pricing structure of $0.01 per 1K tokens for input and $0.03 per 1K tokens for output. (March 2024)

Given that a typical conversation between a virtual agent and a user may involve several rounds of back-and-forth interactions, each requiring intent classification, the cumulative cost per conversation could become significant. This aspect is crucial for businesses to consider when evaluating the feasibility and scalability of deploying LLMs in customer service and engagement platforms, where high volumes of interactions are the norm. Balancing the benefits of high accuracy and efficiency against the potential costs will be key in making informed decisions about the integration of these technologies.

General Recommendations and Conclusions

The initial test underscores the substantial benefits LLM offers in terms of classifying intents and emerge as a potentially alternative in the long term, given its scalable and flexible nature. The promising results from this preliminary assessment advocate for a deeper exploration into LLMs’ role within conversational AI as intent classifier, emphasizing their potential to deliver precise, and efficient solutions for enhancing the conversational experience.

In light of the model’s high performance and the associated cost considerations for intent classification, It is exploring additional strategies to optimize efficiency and manage expenses is recommended. Beyond the core recommendation of leveraging Large Language Models (LLMs) for intent classification, other approaches should be considered:

Minimizing Input Prompts: Streamlining the content and structure of input prompts can reduce the number of tokens processed, lowering costs without compromising the quality of intent classification.

Fine-Tuning the Model: Customizing the model to the specific use case and requirements of your conversational AI application can improve performance and potentially reduce the number of tokens required for accurate intent classification.

Utilizing Retrieval-Augmented Generation (RAG): Incorporating RAG methods can enhance the LLM ability to classify the intent accurately by combining the benefits of retrieval-based and generation-based approaches.

Exploring Other Methods: Investigating additional techniques and methodologies in AI and machine learning can uncover new ways to improve accuracy, efficiency, and cost-effectiveness in intent classification tasks. By adopting a trying out multiple approaches, organizations can better balance the trade-offs between performance and cost, ensuring a scalable and effective deployment of conversational AI systems.

Recommendations with Considerations for Scope of Application

The use of LLM for intent classification is recommended for chatbots and virtual agents handling a limited scope of intents (probably less than 20 or 30 intents). This approach offers high accuracy and efficiency for simpler systems. However, for applications dealing with a broader array of intents, exceeding hundred, caution is advised due to potential complexities, lower classification accuracy and increased resource demands.